Michael Pollock has a good article in today’s WSJ on the difficulty of relying on the standard metrics for mutual fund performance:

If you’re trying to decide what mutual fund to buy, all sorts of rankings and performance figures are easily available. And you should look at most of them with a good deal of skepticism.

In short, here’s the problem with each of the five categories of performance or ranking that Pollock cites:

Total returns: “even if a fund has outperformed for 10 years, its odds of outperforming over the following three to five years are only about 50-50, research by Vanguard Group suggests.”

Benchmark comparisons: “Some stock and bond funds hold a mix of securities significantly different from those in their benchmarks.”

Rankings by total return: “the rankings are affected by statistical quirks, such as survivorship bias, which stem from fund closings, and the additions of newer funds to categories.”

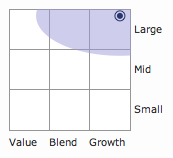

Category and style: The issue here is primarily one of taxonomy, meaning how Morningstar and Lipper sort funds into categories. “Keeping comparisons apples-to-apples can be a challenge as the fund industry keeps launching new funds with differing strategies.”

Morningstar stars: “Morningstar gives funds one to five stars based on historical returns versus peers, adjusted for certain risk measures. Investors find the stars easy to navigate, but… they have limits.” This ties back to the category problem: Morningstar sometimes compares funds with very different mandates and sector concentrations.

And remember, all five of these are performance indicators, and chasing hot performance is a well-known trap. At Covestor, we think two other factors are at least as important: transparency (both in the stocks you hold and in the fees you pay) and a fund manager who has skin in the game alongside you. Here’s how it works.

Source:

“Numbers You Can’t Count On” Michael A. Pollock, Wall Street Journal, 4/4/11

http://online.wsj.com/article/SB10001424052748704758904576188812777723344.html